Generative AI, the ability of artificial intelligence to create new content, is a rapidly advancing field with tremendous potential across industries. By integrating generative AI with the Amazon Web Services (AWS) Cloud, businesses and individuals can unlock a wealth of opportunities. This blog post explores the background, benefits, and practical implications of combining generative AI with AWS Cloud, providing a comprehensive understanding of this powerful technology.

Generative AI encompasses a range of techniques that generate new data samples resembling the training set. Its applications span various domains, including natural language processing, image and video synthesis, drug discovery, and personalized content creation. While discriminative models have been widely used to differentiate data types, generative models excel at creating novel and realistic samples. However, the potential of generative AI has been hindered by computational complexity, resource requirements, and the need for scalable, secure infrastructure.

Generative AI models are trained on large datasets and learn patterns and relationships within the data. This knowledge enables them to generate new content, such as text, images, music, and videos. The applications of generative AI are extensive, ranging from marketing content creation and lead generation to code writing and entertainment media generation. It offers a powerful solution to numerous real-world problems.

Generative AI is a type of AI that can create new content and ideas, including conversations, stories, images, videos, and music. Like all AI, generative AI is powered by ML models: in this case, very large models that are pre-trained on vast amounts of data and commonly referred to as Foundation Models (FMs). Recent advancements in ML (specifically the invention of the transformer-based neural network architecture) have led to the rise of models that contain billions of parameters or variables. To give a sense of the change in scale, the largest pre-trained model in 2019 had 330M parameters. Now, the largest models have more than 500B parameters, an increase of more than 1,500x in just a few years.
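As a quick sanity check on that change in scale, the ratio can be computed directly from the two parameter counts quoted above. This is only a back-of-the-envelope sketch: the figures are the rounded ones from the text, and the constant and function names are illustrative.

```python
# Parameter counts as quoted in the text (rounded, not exact model sizes).
LARGEST_2019_PARAMS = 330e6  # 330M parameters
LARGEST_NOW_PARAMS = 500e9   # "more than 500B", so treated as a lower bound


def scale_factor(old_params: float, new_params: float) -> float:
    """Return how many times larger the new model is than the old one."""
    return new_params / old_params


growth = scale_factor(LARGEST_2019_PARAMS, LARGEST_NOW_PARAMS)
print(f"At least {growth:,.0f}x larger")
```

Because 500B is a lower bound, any model above that size pushes the ratio higher than the value printed here.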

Today’s FMs, such as the large language models (LLMs) GPT-3.5 or BLOOM, and the text-to-image model Stable Diffusion from Stability AI, can perform a wide range of tasks that span multiple domains, like writing blog posts, generating images, solving math problems, engaging in dialog, and answering questions based on a document. The size and general-purpose nature of FMs make them different from traditional ML models, which typically perform specific tasks, like analyzing text for sentiment, classifying images, and forecasting trends.

AWS Cloud provides a robust platform for overcoming the challenges associated with generative AI. It offers scalable compute resources, advanced machine learning services, and efficient data management tools. Researchers and developers can leverage services like Amazon SageMaker and EC2 instances with GPU accelerators to reduce computational and infrastructural burdens. Additionally, AWS's data management and storage services facilitate efficient handling of large datasets required for training generative models.

AWS offers a broad and deep portfolio of AI and ML services at all three layers of the stack: infrastructure, ML tooling, and ready-made AI services. It has invested and innovated to offer performant, scalable infrastructure for cost-effective ML training and inference; developed Amazon SageMaker, which lets developers build, train, and deploy models in a single managed service; and launched a wide range of services that allow customers to add AI capabilities like image recognition, forecasting, and intelligent search to applications with a simple API call.

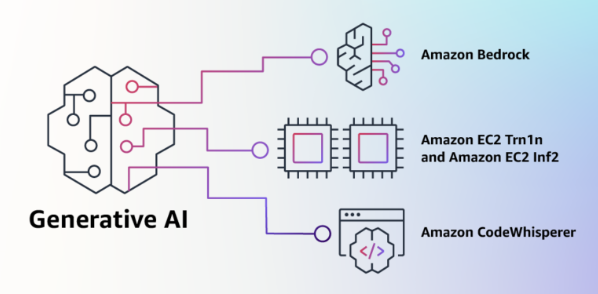

Several AWS services are instrumental in developing and deploying generative AI models:

- Amazon SageMaker: A fully managed machine learning service that simplifies model building, training, and deployment.

- Amazon Bedrock: A new API-based service that provides access to pre-built foundation models from leading AI start-ups (AI21 Labs, Anthropic, and Stability AI) and from Amazon. AWS has also released two new Titan LLMs for use on Bedrock.

- Amazon CodeWhisperer: An AI coding companion that uses an FM under the hood to improve developer productivity by generating code suggestions in real time based on developers’ comments in natural language and prior code in their Integrated Development Environment (IDE).

- Amazon EC2 Trn1n and EC2 Inf2: Trn1 instances, powered by AWS Trainium, can deliver up to 50% savings on training costs over any other EC2 instance, according to AWS, and are optimized to distribute training across multiple servers connected with 800 Gbps of second-generation Elastic Fabric Adapter (EFA) networking. The network-optimized Trn1n instances offer 1600 Gbps of network bandwidth and are designed to deliver 20% higher performance than Trn1 for large, network-intensive models. Inf2 instances, powered by AWS Inferentia2, are the inference-side counterpart.

- Amazon Rekognition: A service capable of object, face, and text detection in images and videos.

- Amazon Polly: A service that generates human-like speech from text.

- Amazon Translate: A service that translates text between different languages.

These services empower the creation of generative AI models tailored to specific use cases, such as marketing content generation, realistic image and video synthesis, human-like speech generation, and text translation.
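To make the "accessible via an API" point concrete, here is a minimal sketch of calling a Titan text model through Bedrock with boto3. It assumes the `bedrock-runtime` client, the model ID `amazon.titan-text-express-v1`, the `us-east-1` region, and the Titan request and response JSON shape; all of these should be checked against the current Bedrock documentation, since request schemas differ per model and model availability differs per account and region. The function names are illustrative.

```python
import json


def build_titan_request(prompt: str, max_tokens: int = 256, temperature: float = 0.5) -> str:
    """Serialize a prompt into the JSON body assumed for Amazon Titan text models."""
    body = {
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": max_tokens,
            "temperature": temperature,
        },
    }
    return json.dumps(body)


def generate_text(
    prompt: str,
    model_id: str = "amazon.titan-text-express-v1",  # assumed model ID
    region: str = "us-east-1",                       # assumed region
) -> str:
    """Send the prompt to Bedrock and return the generated text."""
    import boto3  # imported lazily so the request builder works without the AWS SDK

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=model_id,
        body=build_titan_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream containing JSON; Titan returns a list of results.
    payload = json.loads(response["body"].read())
    return payload["results"][0]["outputText"]
```

With AWS credentials configured and model access enabled, a call such as `generate_text("Write a two-line product tagline for a hiking boot.")` would return the model's text. Models from other providers on Bedrock expect different request bodies, so only `build_titan_request` would need to change.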

Integrating generative AI with AWS Cloud offers numerous advantages:

- Scalability: AWS Cloud services can scale seamlessly to meet the computational requirements of generative AI models.

- Efficiency: By utilizing AWS services like SageMaker, developers can focus on model design instead of operational issues.

- Accessibility: Amazon Bedrock makes generative AI technology more accessible, enabling small businesses and individuals to leverage its potential.

- Security and Compliance: AWS provides comprehensive security features to safeguard sensitive data and ensure compliance with regulations.

- Cost-Effectiveness: AWS's pay-as-you-go model allows users to pay only for the resources they utilize, providing a cost-effective solution for deploying generative AI.
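
The pay-as-you-go point can be illustrated with simple arithmetic. The sketch below compares paying only for the hours a training job runs against keeping the same instances on for a whole month. The hourly rate, instance count, and job duration are hypothetical placeholders, not AWS prices.

```python
HOURS_PER_MONTH = 730  # average number of hours in a month


def on_demand_cost(hourly_rate_usd: float, instance_count: int, hours: float) -> float:
    """Cost of running instance_count instances for the given number of hours."""
    return hourly_rate_usd * instance_count * hours


# Hypothetical values for illustration only; real prices vary by region and instance type.
HYPOTHETICAL_RATE_USD = 20.0  # per instance-hour
INSTANCES = 4
JOB_HOURS = 72                # a three-day training run

pay_per_use = on_demand_cost(HYPOTHETICAL_RATE_USD, INSTANCES, JOB_HOURS)
always_on = on_demand_cost(HYPOTHETICAL_RATE_USD, INSTANCES, HOURS_PER_MONTH)

print(f"Pay only for the job: ${pay_per_use:,.2f}")
print(f"Same fleet left running all month: ${always_on:,.2f}")
```

The gap between the two numbers is the portion of the bill that shutting resources down after the job avoids.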

To implement generative AI on AWS Cloud, a simple strategy can be followed:

- Data Storage and Management: Use services like Amazon S3 for data storage and AWS Glue for ETL operations if necessary.

- Model Development: Employ Amazon SageMaker and its built-in Jupyter notebook instances for model development and debugging. Access the latest LLMs through Amazon Bedrock, including the Titan LLMs from AWS.

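- Model Training: Utilize EC2 instances equipped with GPUs or purpose-built accelerators for training large generative models. The latest options aimed at generative AI are EC2 Trn1n instances (AWS Trainium) for training and Inf2 instances (AWS Inferentia2) for inference; both use AWS-designed accelerators rather than GPUs.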

- Deployment: Deploy the trained model as a SageMaker endpoint for real-time predictions or leverage SageMaker Batch Transform for bulk data processing.

- Monitoring and Maintenance: Employ Amazon CloudWatch to monitor application health and performance. Regularly review and optimize resource usage with AWS Cost Explorer.
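
The deployment and monitoring steps above can be sketched as follows: call a deployed SageMaker real-time endpoint, then publish the observed latency to CloudWatch as a custom metric. This is a hedged illustration rather than a reference implementation. The `{"inputs": ...}` request body, the `GenAIDemo` namespace, the `ClientLatency` metric name, and the default region are assumptions; the actual payload format depends on the model container behind the endpoint.

```python
import json
import time


def build_payload(prompt: str) -> bytes:
    """Encode a prompt as a JSON request body (schema assumed; it depends on the model)."""
    return json.dumps({"inputs": prompt}).encode("utf-8")


def invoke_and_record(prompt: str, endpoint_name: str, region: str = "us-east-1"):
    """Call a SageMaker real-time endpoint and publish the call latency to CloudWatch."""
    import boto3  # imported lazily so build_payload works without the AWS SDK

    runtime = boto3.client("sagemaker-runtime", region_name=region)
    cloudwatch = boto3.client("cloudwatch", region_name=region)

    start = time.perf_counter()
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=build_payload(prompt),
    )
    latency_ms = (time.perf_counter() - start) * 1000.0
    result = json.loads(response["Body"].read())

    # Custom metric; the namespace and metric name are hypothetical.
    cloudwatch.put_metric_data(
        Namespace="GenAIDemo",
        MetricData=[
            {
                "MetricName": "ClientLatency",
                "Dimensions": [{"Name": "EndpointName", "Value": endpoint_name}],
                "Value": latency_ms,
                "Unit": "Milliseconds",
            }
        ],
    )
    return result
```

SageMaker endpoints also emit their own metrics to CloudWatch, so a client-side metric like this one is mainly useful for capturing latency as the application experiences it.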

Generative AI has vast potential in multiple industries, providing avenues for innovation and growth. By harnessing AWS Cloud's advanced services, scalability, and cost-effectiveness, the implementation of generative AI becomes more attainable for organizations of all sizes. Integrating generative AI with AWS represents a strategic move towards the future of artificial intelligence. This powerful combination enables businesses and individuals to leverage generative AI's capabilities and revolutionize their respective domains.